3. Wave Hacks Journey - 45-Day Sprint

"Learning by doing": in Wave3 we built the technical fundamentals, and in Wave4 we validated the user value. The insights from these 45 days became practical knowledge that desk planning alone could never have produced.

Wave3 (February 2025) - Proof of Technology

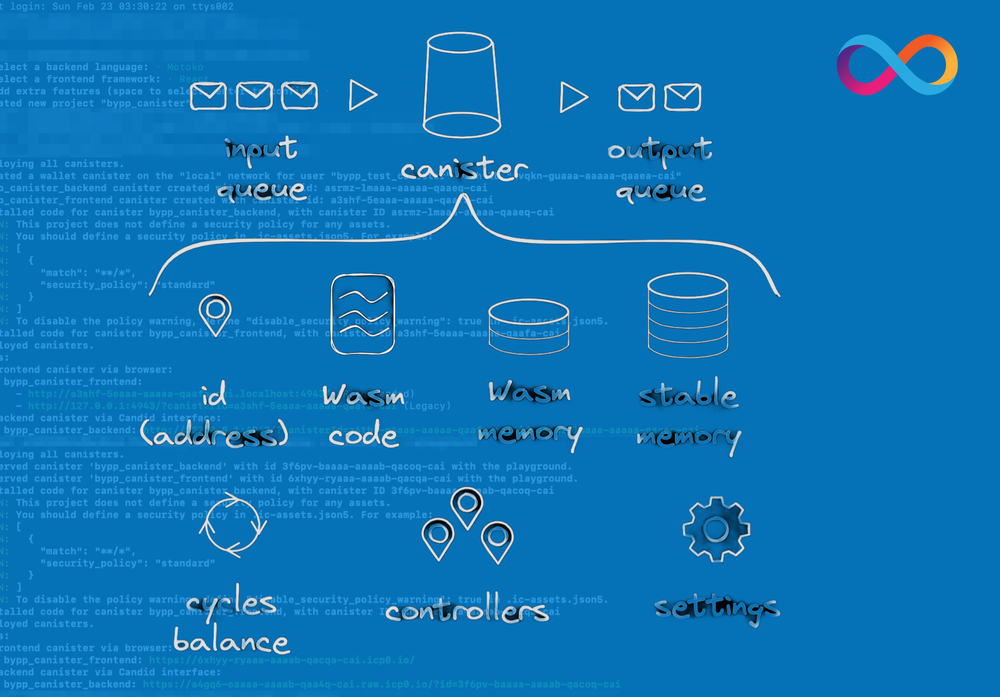

Technology Foundation Establishment

- Deployed a sample canister to the Playground and gained a working command of the ICP development workflow

- Wrote macOS environment setup documentation so that other developers can reproduce the environment within 48 hours

- Ran mock customer interviews to reconfirm pain points, counting how often people said "too many apps" and "setup is scary"

Critical Discovery

Beyond technical complexity, "psychological complexity" emerged as the biggest bottleneck in the user experience. Users were not complaining about missing features; they were anxious about "not understanding" and afraid of "making mistakes."

Wave4 (February-March 2025) - Proof of Experience

Experience Value Validation

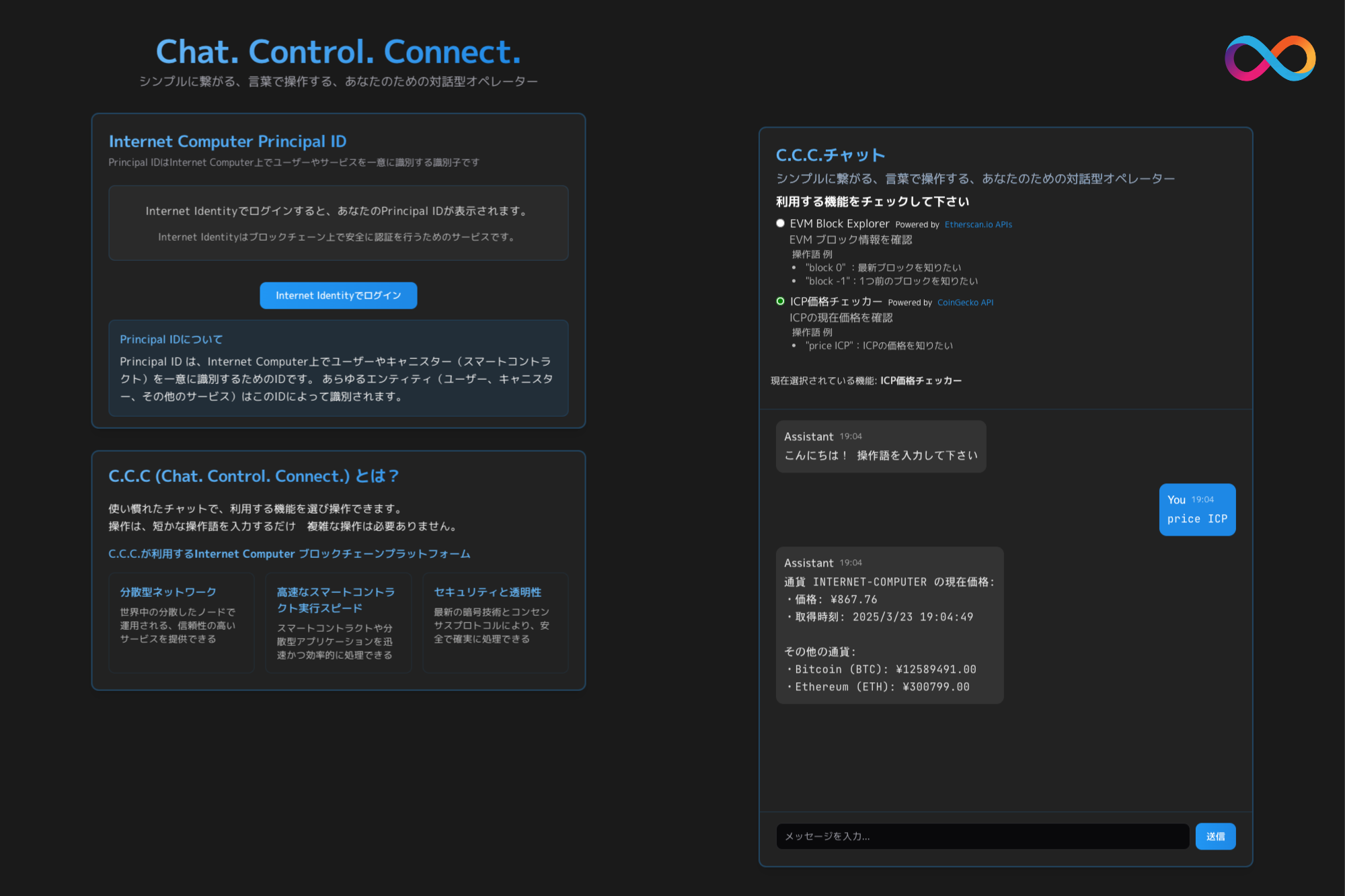

1. Chat UX Prototype Development

- Internet Identity login → Principal generation completed in 3 clicks

- Parsing of natural-language requests such as "Tell me ICP's price", with the results presented as menu-style operations

- Realized the shift from a UI users must "memorize" to one they can simply "ask"
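As the judges' comments note, the prototype currently turns chat input into function calls through regex matching. The sketch below shows what such a matcher might look like. It is a minimal illustration under stated assumptions: the intent names (`get_price`, `set_device`), the patterns, and the `TOKEN_IDS` table are hypothetical and are not the prototype's actual code.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Intent:
    """A parsed request: which operation to run and with which arguments."""
    name: str
    args: dict = field(default_factory=dict)

# Hypothetical mapping from chat-facing token names to API coin IDs.
TOKEN_IDS = {"icp": "internet-computer", "eth": "ethereum", "btc": "bitcoin"}

# Hypothetical patterns, tried in order; named groups become arguments.
PATTERNS = [
    ("get_price", re.compile(r"\b(?P<token>[a-z]+)(?:['’]s)?\s+price\b", re.IGNORECASE)),
    ("get_price", re.compile(r"\bprice\s+of\s+(?P<token>[a-z]+)\b", re.IGNORECASE)),
    ("set_device", re.compile(
        r"\bturn\s+(?P<state>on|off)\s+(?:the\s+)?(?P<device>[a-z]+)\b", re.IGNORECASE)),
]

def parse(text: str) -> Optional[Intent]:
    """Return the first intent whose pattern matches, or None if nothing does."""
    for name, pattern in PATTERNS:
        match = pattern.search(text)
        if not match:
            continue
        args = {k: v.lower() for k, v in match.groupdict().items() if v is not None}
        if "token" in args:
            # Reject words like "the" in "the price of ..." and try the next pattern.
            if args["token"] not in TOKEN_IDS:
                continue
            args["token"] = TOKEN_IDS[args["token"]]
        return Intent(name, args)
    return None

print(parse("Tell me ICP's price"))
```

Because each match yields an operation name plus named arguments, the output already has the same shape as an LLM function call, so replacing the regex layer with ToolUse or MCP, as the judges suggest, would plausibly leave the code that executes operations unchanged.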

2. External Data Integration Implementation

- Implemented HTTPS outcalls to retrieve data from the Etherscan and CoinGecko APIs

- "Visualizations" that make blockchain information intuitive even for users unfamiliar with it

- Real-time data retrieval, delivering live values instead of a static information display
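On ICP, an HTTPS outcall is made by each replica of the subnet, and a transform function normalizes the responses (typically by dropping headers such as dates) so the replicas can agree on one result. The sketch below illustrates that flow for CoinGecko's public `simple/price` endpoint. It is written in Python purely to show the logic; a real canister would express this in Motoko or Rust via the management canister's `http_request` method. `HttpResponse`, `price_url`, `transform`, and `parse_price` are hypothetical names, not the project's code.

```python
import json
from dataclasses import dataclass
from urllib.parse import urlencode

COINGECKO_SIMPLE_PRICE = "https://api.coingecko.com/api/v3/simple/price"

@dataclass(frozen=True)
class HttpResponse:
    """Minimal stand-in for an outcall response (status, headers, body)."""
    status: int
    headers: tuple  # ((name, value), ...)
    body: bytes

def price_url(coin_id: str, currency: str = "usd") -> str:
    """Build the request URL, e.g. for coin_id="internet-computer"."""
    query = urlencode({"ids": coin_id, "vs_currencies": currency})
    return f"{COINGECKO_SIMPLE_PRICE}?{query}"

def transform(resp: HttpResponse) -> HttpResponse:
    """Drop headers, which can differ per replica (Date, request IDs, ...),
    so that identical bodies yield identical responses."""
    return HttpResponse(status=resp.status, headers=(), body=resp.body)

def parse_price(resp: HttpResponse, coin_id: str, currency: str = "usd") -> float:
    """Extract the price from a body shaped like {"<coin_id>": {"<currency>": n}}."""
    if resp.status != 200:
        raise ValueError(f"unexpected HTTP status {resp.status}")
    data = json.loads(resp.body)
    return float(data[coin_id][currency])

print(price_url("internet-computer"))
```

One caveat worth noting: if the price changes between the replicas' individual requests, the bodies differ as well and stripping headers is not enough, so a production transform may also need to normalize the body.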

3. Positive Evaluation in the Judges' Comments

Judges credited the project with demonstrating that AI services could run on IoT devices while preserving data sovereignty through ICP's decentralized architecture. On the technical side, they expressed hopes that the current regex matching would evolve into a combination of LLM ToolUse and MCP, and judged this direction feasible.

For example, many companies currently use AI for meeting transcription. The problem is that the transcripts and the recordings themselves are used as training data by Web2 AI services and stored in centralized clouds. When they contain corporate secrets or national-security information, this gives hackers and malicious insiders an incentive to steal them. If IoT devices, something like a home smart speaker, could run AI services linked to IoT data, that would be very interesting. Many companies now have strict policies for handling IoT data. Looking forward to future development!

Thank you for using ICP.ninja as well. Together with the integration of external oracle data via HTTPS outcalls, I have high expectations for future scalability and for how well the project fits real usage scenarios (such as operating IoT devices through chat). It would be great to see more specifics on where the advantages of decentralization come into play.

I found the concept of leveraging ICP's high security to enable conversational operation of real-world IoT devices extremely interesting. While I think this could also be achieved with Web2, it would be wonderful to have strengths that 'only ICP can solve,' such as using features like the ManagementCanister to provide user-sovereign device lending by linking IoT devices and Canisters one-to-one. Currently, you're implementing function calls through regex matching, but I can envision good compatibility with LLM ToolUse (FunctionCalling) and MCP (Model Context Protocol), and with small LLMs it could be realized even in mobile apps. I also hope you'll address mainnet Canister deployment and external API integration through HttpOutCalling in future updates. I'm personally very interested in IoT×AI and in exploring its feasibility, so I'm looking forward to C.C.C.'s development!

4. Insights More Important Than Technical Achievements

We found that a chat UI does more than serve as an interface: it fundamentally changes the relationship between user and system. Traditional GUIs require users to learn the "correct operating procedure," whereas chat lets them operate the system simply by stating "what they want to do."

Moving from "strict, command-based grammar" to "natural-language dialogue that tolerates ambiguity" also makes the system dramatically easier to operate. However, we recognized that this requires mechanisms for executing operations safely. Specifically, C.C.C. will accept dialogue input by keyboard (QWERTY) or voice, and before executing an operation it will present selection buttons so the user can confirm the details, then execute with a single tap.
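The confirm-before-execute step described above can be sketched as a small gate: a parsed request becomes a pending operation that runs only after an explicit tap on a confirmation button. This is a minimal sketch assuming such a design; `ConfirmationGate`, `propose`, `respond`, and the `Confirm`/`Cancel` labels are hypothetical names, and C.C.C.'s actual implementation may differ.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, Dict

@dataclass
class PendingOperation:
    op_id: int
    summary: str                 # human-readable text shown next to the buttons
    action: Callable[[], str]    # runs the operation, returns a result message

class ConfirmationGate:
    """Holds parsed operations until the user explicitly confirms them."""

    def __init__(self) -> None:
        self._pending: Dict[int, PendingOperation] = {}
        self._ids = itertools.count(1)

    def propose(self, summary: str, action: Callable[[], str]) -> dict:
        """Register an operation and return what the chat UI would render."""
        op = PendingOperation(next(self._ids), summary, action)
        self._pending[op.op_id] = op
        return {"op_id": op.op_id, "summary": summary, "buttons": ["Confirm", "Cancel"]}

    def respond(self, op_id: int, choice: str) -> str:
        """Handle a button tap. pop() guarantees each operation is handled once."""
        op = self._pending.pop(op_id, None)
        if op is None:
            return "No pending operation (already handled or unknown)."
        if choice == "Confirm":
            return op.action()
        # Anything other than an explicit Confirm is treated as a cancel (fail-safe).
        return f"Cancelled: {op.summary}"
```

Removing the operation from the pending table before acting means a double tap cannot run it twice, and defaulting every non-Confirm answer to a cancel keeps an ambiguous response from triggering a device action.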